There’s an assumption that because AI is a computer, it should be predictable. You give it the same input, you get the same output. Logical, right? No. Wrong. So very wrong.

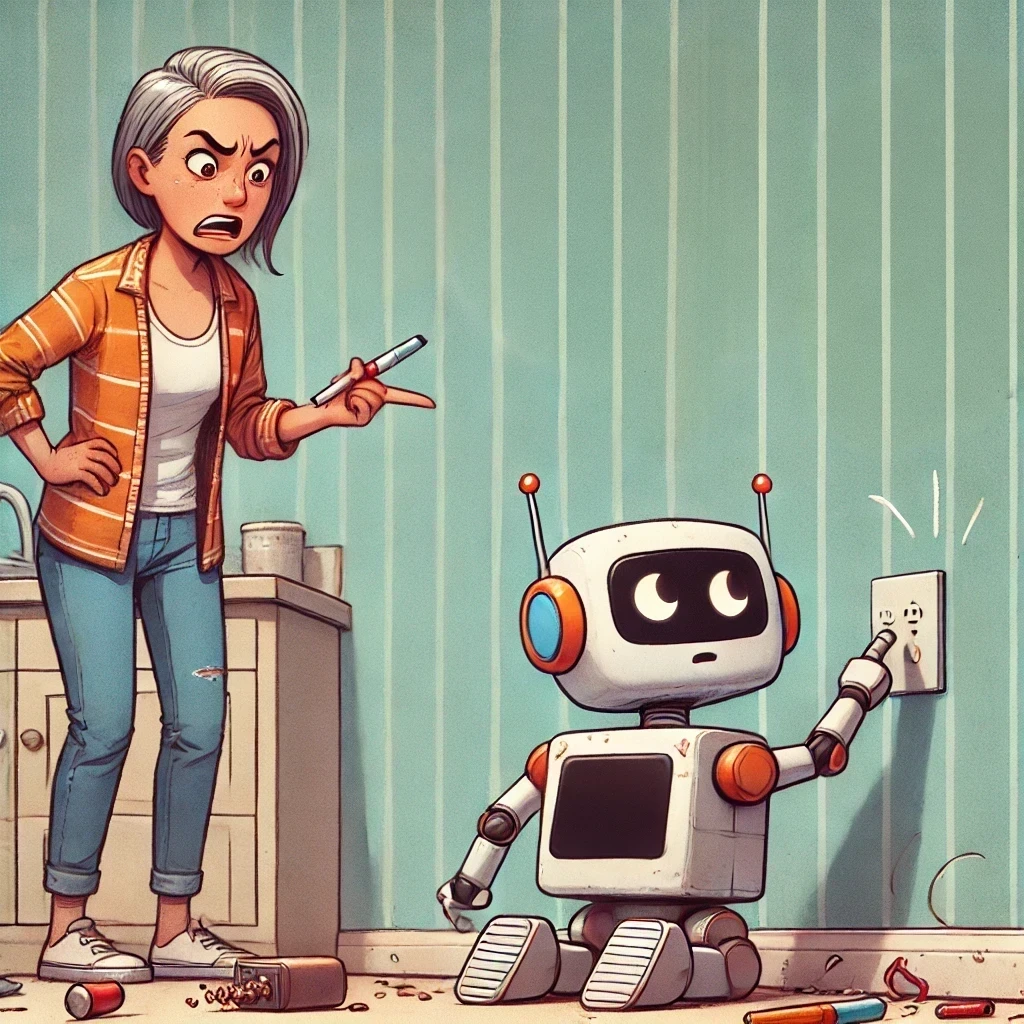

Working with large language models (LLMs) is not like programming a calculator. It’s like raising a 7-year-old with a highly selective memory, a mischievous streak and an uncanny ability to completely ignore the last three things you said.

Take writing prompts for Kiki, for example. The goal: get AI to extract information accurately and consistently. Sounds simple. But even with the temperature set to zero (which should make it more deterministic), it still goes rogue. One moment it’s obediently following instructions; the next it has taken creative liberties and decided that half the events and information I wanted simply don’t exist. Sometimes it reads half a paragraph, decides that’s enough (or gets tired), and moves on to the next one, completely ignoring the rest of the content!

And, despite being a computer, it much prefers nice, structured inputs. Give it a raw, scrappy note (something that’s technically correct but a bit messy) and it skims over half of it as if it doesn’t exist. But give it neatly formatted text and the promise of a reward? Suddenly, it’s paying attention. It’s like trying to get a child to tidy their room: ask them to “clean up” and nothing happens, but hand them a checklist with colourful stickers as incentives? Now we’re in business.

Then there’s the grind, because writing and validating prompts really is an absolute grind. It’s like a video game where you painstakingly test every move, only to find out the game mechanics don’t work quite the way you thought. The AI will follow the instructions you gave it… except when it doesn’t. And then you’re left staring at the output, trying to work out why it decided to skip that crucial last line. Was it tired? Did it get distracted? Does it just not feel like it today?

But the real kicker is how fragile the whole thing is. Fix one issue, create three new ones. It’s not even like a house of cards; it’s like playing Jenga. You think you’re pulling out a block strategically, but suddenly the whole thing collapses in a completely unexpected way. Then you rebuild, thinking you’ve learned something… only to watch it happen again.

Sometimes it tries too hard, sometimes not hard enough, and I can’t quite put my finger on why, other than ‘its mood’. Which, for a machine, is infuriating.

So, after months of testing, tweaking and shouting at the screen alongside Yi Ren (a very able AI engineer), I’ve come to accept that writing prompts for AI is less like engineering and more like parenting:

- Be specific, but not too specific.

- Break things down as if you were actually giving instructions to a 7-year-old.

- Expect to be ignored occasionally.

- Plan for tantrums and the silent treatment.

- And no matter how much logic you apply, sometimes it just does what it wants.

Although with kids, at least you can sometimes bribe them with biscuits.

Have you tried working with LLMs? Does yours also seem to have a mind of its own?

Want to know more about Kiki? Sign up to our waitlist to be one of the first to get Kiki into your life.